As debate rumbles on about how and how much poor statistics is to blame for poor reproducibility, Nature asked influential statisticians to recommend one change to improve science. The common theme? The problem is not our maths, but ourselves.

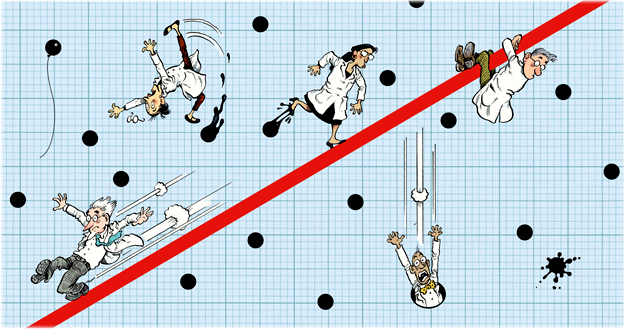

To use statistics well, researchers must study how scientists analyse and interpret data and then apply that information to prevent cognitive mistakes.

In the past couple of decades, many fields have shifted from data sets with a dozen measurements to data sets with millions. Methods that were developed for a world with sparse and hard-to-collect information have been jury-rigged to handle bigger, more-diverse and more-complex data sets. No wonder the literature is now full of papers that use outdated statistics, misapply statistical tests and misinterpret results. The application of P values to determine whether an analysis is interesting is just one of the most visible of many shortcomings.

It’s not enough to blame a surfeit of data and a lack of training in analysis. It’s also impractical to say that statistical metrics such as P values should not be used to make decisions. Sometimes a decision (editorial or funding, say) must be made, and clear guidelines are useful.

The root problem is that we know very little about how people analyse and process information. An illustrative exception is graphs. Experiments show that people struggle to compare angles in pie charts yet breeze through comparative lengths and heights in bar charts. The move from pies to bars has brought better understanding …
